Performance Animation

Recording Setup and Process:

The university captures motion capture data with Shogun, a collection of programs created by Vicon. Another Vicon program, Eclipse, is used to organise the files created when capturing data.

In Vicon Eclipse, a set is created for the current capture session. Four separate sessions are then created in this set: CAL (camera calibration), ROM (actor range of movement), AM (morning capture sessions) and PM (afternoon capture sessions). Once this file system has been set up, the capture itself can be set up.

A program called Shogun Live is used to record the motion capture. The image above shows the step-by-step process that you have to follow to calibrate the camera system. Masking requires the capture area to be empty. It creates a mask around anything that the cameras pick up in the capture area, which ensures that only the motion capture suit is picked up later. The wave step uses a 'wand' (see below) to calibrate the cameras. As the wave is happening, Shogun Live displays a mask of the performance area (see below).

Next, the wand is placed in the centre of the performance area, which allows you to set the origin of the 3D scene created by Shogun Live. Five markers are then used to create a floor plane: one is placed at the origin in the centre of the performance area and one in each corner of the area. Shogun can then use the positional data from these markers to create a ground plane.
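As a rough illustration of how five marker positions can define a ground plane, the sketch below fits a plane z = a*x + b*y + c to the markers by least squares. This is not Shogun's actual method, which is not described here. The function names, the Z-up axis convention and the millimetre units are all assumptions made for the example.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as a list of three rows."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def fit_floor_plane(markers):
    """Least-squares fit of z = a*x + b*y + c to (x, y, z) marker positions.

    Assumes a Z-up scene; returns the coefficients (a, b, c).
    """
    n = float(len(markers))
    sx = sum(p[0] for p in markers)
    sy = sum(p[1] for p in markers)
    sz = sum(p[2] for p in markers)
    sxx = sum(p[0] * p[0] for p in markers)
    sxy = sum(p[0] * p[1] for p in markers)
    syy = sum(p[1] * p[1] for p in markers)
    sxz = sum(p[0] * p[2] for p in markers)
    syz = sum(p[1] * p[2] for p in markers)

    # Normal equations for the least-squares problem.
    lhs = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]

    d = det3(lhs)
    if abs(d) < 1e-12:
        raise ValueError("markers are collinear or too few to define a plane")

    # Cramer's rule: swap each column for the right-hand side in turn.
    coeffs = []
    for col in range(3):
        m = [row[:] for row in lhs]
        for r in range(3):
            m[r][col] = rhs[r]
        coeffs.append(det3(m) / d)
    return tuple(coeffs)


# Hypothetical marker positions in millimetres: one at the origin of the
# performance area and one in each corner, on a slightly tilted floor.
floor_markers = [
    (0.0, 0.0, 2.0),
    (1000.0, 1000.0, 12.0),
    (-1000.0, 1000.0, -8.0),
    (1000.0, -1000.0, 12.0),
    (-1000.0, -1000.0, -8.0),
]
a, b, c = fit_floor_plane(floor_markers)
```

With five markers the fit is overdetermined, so a small error in any single marker is averaged out rather than tilting the whole floor.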

The next step is to apply the markers to the capture suit. There are 53 markers that have to be applied, mainly at the major joints of the actor's body. The placement of these markers is very important, as they will define the rig created in Shogun.

The suit then needs to be calibrated with the cameras and the software. The settings above are used to create a new subject/actor. Once the subject has been created, the actor needs to go through a range of movement exercise (see below), which improves the accuracy of the data capture.

Once the subject calibration is complete, data capture can begin. Below is a collection of recordings.

After the data has been captured it needs to be exported, which is done with a program called Shogun Post. Using Shogun Post you can export the captured data as an FBX file, which can then be read into Maya and applied to a character rig.

Data Import and Application:

The video below shows the process of importing the motion capture data and applying it to a rigged character.

As mentioned above, the data is exported as an FBX file, which can be imported directly into Maya. When imported, an animated skeleton appears in the viewport, along with several locators showing the locations of the tracking points and cameras. These locators can be deleted.

A system called HumanIK is used to manage the application of the captured data onto another rig. HumanIK uses a characterisation system to transfer animation data from one rig to another. The above image shows the character definition of the robot rig seen above. The imported rig also has a definition set up. Once a rig has a defined character, it can use animation data from another characterised rig. In the screenshot above you can see a 'Source:' option, and using its drop-down menu you can select the character definition created for the mocap data. However, the motion capture rig first needs to be set to a T-pose at the beginning of the animation, as this helps the HumanIK system create a good character definition. A MEL script can be used to do this (see below left). The animation can then be applied to the robot rig. Once the animation has been applied you can bake it onto a control rig, which HumanIK creates by using the definition to place controllers for the joints. Once the animation is baked onto a control rig it can be edited using animation layers, which allows non-destructive editing of the motion capture data (see layer system below right).
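The idea behind characterisation can be sketched in plain Python: each rig maps its own joint names onto a shared set of slots, and animation moves from one rig to the other through those slots. This is only a conceptual sketch, not HumanIK's solver, which also accounts for differences in proportions between rigs. All joint names, the slot names and the `retarget_pose` function are hypothetical.

```python
# Each character definition maps shared slots to that rig's joint names.
# Joint names here are invented for illustration.
MOCAP_DEFINITION = {
    "Hips": "mocap_Hips",
    "Spine": "mocap_Spine",
    "LeftArm": "mocap_LeftArm",
}
ROBOT_DEFINITION = {
    "Hips": "robot_pelvis",
    "Spine": "robot_torso",
    "LeftArm": "robot_arm_L",
}


def retarget_pose(source_pose, source_definition, target_definition):
    """Copy per-joint rotations from a source rig to a target rig.

    source_pose maps source joint names to (rx, ry, rz) rotations.
    Slots missing from either definition, or joints with no data,
    are skipped.
    """
    target_pose = {}
    for slot, source_joint in source_definition.items():
        target_joint = target_definition.get(slot)
        if target_joint is None or source_joint not in source_pose:
            continue
        target_pose[target_joint] = source_pose[source_joint]
    return target_pose


# One frame of hypothetical mocap rotations, in degrees.
mocap_frame = {"mocap_Hips": (0, 10, 0), "mocap_LeftArm": (0, 0, 45)}
robot_frame = retarget_pose(mocap_frame, MOCAP_DEFINITION, ROBOT_DEFINITION)
```

Copying rotations slot by slot only makes sense if both rigs were characterised in the same reference pose, which is one way to see why the T-pose step matters.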

Final Render:

Live-action reference:

Assignment 2:

Final Render

Breakdown

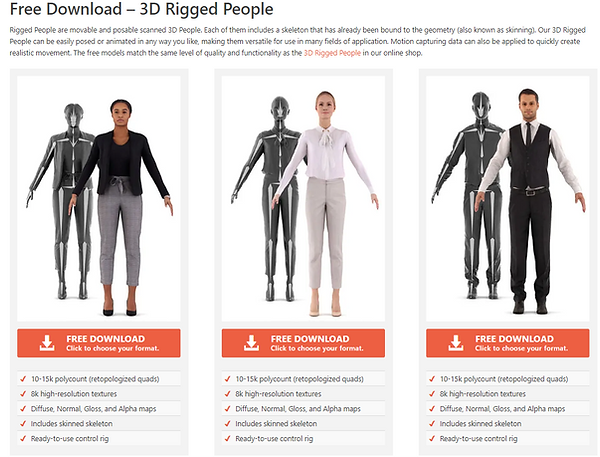

I downloaded two rigged, 3D-scanned character models from renderpeople.com. These character models come with a complete HumanIK control rig and character definition, which really helps when it comes to applying motion capture data.

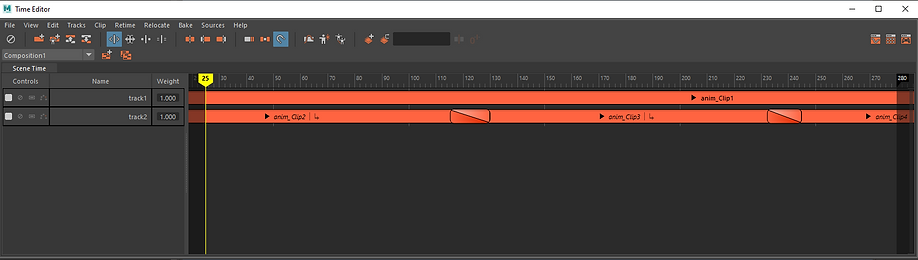

The Time Editor is a useful non-linear editor that can be used to combine animation clips. This is especially useful when repeating a short motion capture driven animation. The Time Editor also gives access to the relocator system, which allows you to match parts of one animation to another using the positions of bones on the animated rigs.
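To show why position matching matters when repeating a clip, here is a minimal sketch that loops a short clip by offsetting each repeat so that it starts where the previous one ended. It is not how the Time Editor or its relocators are implemented. It assumes the clip is just a list of root positions, one per frame, and `loop_clip` is a hypothetical helper.

```python
def loop_clip(root_positions, repeats):
    """Repeat a clip so each repeat continues from the previous end position.

    root_positions is a list of (x, y, z) tuples, one per frame.
    """
    if not root_positions or repeats < 1:
        return []

    start = root_positions[0]
    end = root_positions[-1]
    # Distance the character travels over one pass of the clip.
    stride = tuple(e - s for s, e in zip(start, end))

    looped = []
    for r in range(repeats):
        offset = tuple(r * d for d in stride)
        # After the first pass, drop the first frame: once offset, it
        # coincides with the last frame of the previous pass.
        frames = root_positions if r == 0 else root_positions[1:]
        for pos in frames:
            looped.append(tuple(p + o for p, o in zip(pos, offset)))
    return looped


# A three-frame walk forward along x, repeated twice.
walk = [(0, 0, 0), (1, 0, 0), (2, 0, 0)]
walk_twice = loop_clip(walk, 2)
```

Without the offset, the character would snap back to the starting position at the beginning of every repeat.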

Another useful non-linear editor used in this project was the Camera Sequencer. The Camera Sequencer gives you a great deal of control over creating and structuring a series of shots for a scene. It lets you use multiple cameras in one scene and pick and choose between different shots.

After rendering I took the shots into Nuke, where I used a grade node to do a basic colour correction and applied motion blur. For the first two shots I significantly increased the shutter time parameter, from the default of 0.75 up to 5, which adds a huge amount of motion blur to the shots. I did this to try to add the effect of drunkenness. Combined with the strange, Dutch-angle camera shots, this achieves the effect.
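The size of that change can be estimated with some simple arithmetic. The sketch below assumes the shutter time is measured in frames and that an object moves in a straight line at constant speed, so the blur streak is roughly speed multiplied by shutter time. The function name and the example speed are hypothetical.

```python
def blur_streak_length(speed_px_per_frame, shutter_time):
    """Approximate blur streak length in pixels for linear motion.

    Assumes shutter_time is expressed in frames.
    """
    return speed_px_per_frame * shutter_time


# A hypothetical object moving 10 pixels per frame.
default_streak = blur_streak_length(10, 0.75)
drunk_streak = blur_streak_length(10, 5)
increase = drunk_streak / default_streak
```

Under these assumptions the streak grows from 7.5 to 50 pixels, between six and seven times longer than the default.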

Finally, I took the shots into Adobe Premiere Pro. I used Premiere's time remapping abilities to slow down the second shot and double its length, as the original render was too fast to be read properly. I then added the music track. The audio fades in at the beginning, and has a low-pass filter applied at the start of the second shot.
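Doubling the length of a shot is the same as playing it at 50% speed: each output frame samples the source at half the rate. The sketch below computes which source frame position each output frame lands on. It is an illustration only, and `remap_source_times` is a hypothetical helper. How Premiere fills in the in-between positions depends on the clip's time interpolation setting.

```python
def remap_source_times(frame_count, speed):
    """Return the source frame position sampled by each output frame.

    speed is the playback rate, e.g. 0.5 for half speed.
    """
    if speed <= 0:
        raise ValueError("speed must be positive")
    output_count = round(frame_count / speed)
    return [i * speed for i in range(output_count)]


# A four-frame clip at half speed becomes eight output frames.
half_speed = remap_source_times(4, 0.5)
```

The fractional positions (0.5, 1.5 and so on) fall between rendered frames, so the editor has to either repeat a frame or synthesise a new one there.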